I’m in the cloud, and I’m getting cold and wet: it’s raining in my cloud.

Cloud services are supposed to be the saviour of corporations, and I dare say there are a lot of really good real-world examples where this is the case.

Take companies that need extra processing power as and when they need it, from services such as AWS (Amazon Web Services), Google Apps or even (for me, shock and horror) something like Office 365. That is all well and good.

However, let’s take me as a not so good example…

Granted, I’ve never had a normal setup for some regular Joe at home, but let’s look at what I need.

Backup… well, sort of. It’s more about making sure I have multiple copies, and that those copies stay synchronised across multiple devices: my MacBook, my Linux laptop, my iPad and my iPhone, and maybe even a Windows machine if I desperately need to use Windows for something.

In short, I need a cleaner way of keeping all the files on these machines up to date, so I’ve looked at Dropbox, Google Drive and even Apple’s cloud services.

So, let’s look at the figures.

Dropbox: 500GB is $49.99 per month if you pay in advance for a year.

Apple’s cloud services top out at 55GB, which isn’t even enough to back up the entire contents of my 64GB iPad. Eh, what?!

Let’s look at Google Drive: that is $49.99 per month for 1TB.

The question, I guess, is how much data I have.

OK: when I first started writing this entry, the Documents directory on the laptop I’m writing it on was 3.6GB, within the “free” allowance of all the available options. But as I have consolidated all my documents from a number of devices, it is now 5.8GB, which is over that 5GB of free space.
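Measuring that figure on each machine is easy to script. A minimal sketch, assuming the Documents directory lives at `~/Documents` (adjust the path per machine); it counts regular files only and skips symlinks, so the total may differ slightly from what Finder or `du` reports, since those measure disk blocks used rather than file sizes:

```python
import os
from pathlib import Path


def directory_size_bytes(root):
    """Sum the sizes of all regular files under root, skipping symlinks."""
    total = 0
    # os.walk does not follow symlinked directories by default.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def human_gb(n_bytes):
    """Format a byte count in decimal gigabytes, as storage plans are quoted."""
    return f"{n_bytes / 1e9:.1f}GB"


if __name__ == "__main__":
    docs = Path.home() / "Documents"  # assumed location; change per machine
    print(human_gb(directory_size_bytes(docs)))
```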

However, this 5.8GB doesn’t include my photographs. Those take a little more than that: nearly 80 times as much, at around 450GB currently, and that includes all the RAW files, as I shoot in RAW format (and used to shoot RAW+JPG).

That 450GB is a lot of data; it exceeds Apple’s cloud offering by a considerable margin, but fits in the 500GB bracket for Dropbox and Google.

Now, when I go on a shoot, say in Wales or the Lake District, or at an air show, I can easily burn through 24GB of photographs a day.

OK, some of these are rubbish that I really should get rid of, but historically I haven’t.

Taking that into consideration, I will easily burn through Dropbox’s “sane” offering, at least without taking the 1TB option as a “team”.
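A rough back-of-the-envelope check, using the figures above and assuming a flat 24GB per shooting day with no culling afterwards, shows how little headroom a 500GB plan leaves compared with a 1TB one:

```python
import math


def shooting_days_until_full(capacity_gb, used_gb, gb_per_day):
    """Whole shooting days that still fit in the remaining space of a plan."""
    headroom = capacity_gb - used_gb
    if headroom <= 0:
        return 0
    return math.floor(headroom / gb_per_day)


# Figures from the text: ~450GB of photos, up to 24GB per shooting day.
print(shooting_days_until_full(500, 450, 24))   # 2
print(shooting_days_until_full(1000, 450, 24))  # 22
```

In other words, one long weekend of shooting would fill the 500GB bracket.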

That leaves me Google.

No real problem there.

But – let me look at another option.

I host my own gear, actually on the server that this website runs on.

It sits in a datacentre somewhere in the EU, Germany to be precise (not that I am giving anything away here; it’d be trivial to look up the IP address of this site and work it out).

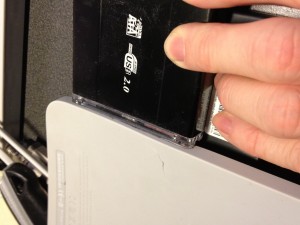

Given that I have a bit of space left on the drives this site lives on, I installed “ownCloud”.

From ownCloud’s own description, it’s effectively an open-source Dropbox-alike that runs on an Apache web server and turns that server into a cloud storage instance. ownCloud has clients for Windows, Mac, Linux, iPhone/iPad and Android devices.
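Under the hood, ownCloud makes its files available over WebDAV, which is HTTP plus a few extra methods, so an upload boils down to an HTTP `PUT` of the file’s bytes. A minimal sketch, assuming the classic `remote.php/webdav/` endpoint and HTTP Basic authentication; the server URL, user name and password are hypothetical placeholders. It only builds the request, so nothing is sent until it is passed to `urllib.request.urlopen`:

```python
import base64
import urllib.parse
import urllib.request


def build_webdav_upload(server_url, remote_path, data, user, password):
    """Build (but do not send) a WebDAV PUT that uploads `data` to ownCloud.

    Assumes the classic ownCloud WebDAV endpoint at
    <server_url>/remote.php/webdav/.
    """
    # Percent-encode the path, keeping the "/" separators intact.
    quoted = urllib.parse.quote(remote_path.lstrip("/"))
    url = server_url.rstrip("/") + "/remote.php/webdav/" + quoted
    request = urllib.request.Request(url, data=data, method="PUT")
    # HTTP Basic authentication: base64 of "user:password".
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request.add_header("Authorization", "Basic " + token)
    request.add_header("Content-Type", "application/octet-stream")
    return request


# Hypothetical server and credentials, for illustration only.
req = build_webdav_upload("https://cloud.example.org/owncloud",
                          "Documents/notes.txt", b"hello", "me", "secret")
print(req.get_method(), req.full_url)
# PUT https://cloud.example.org/owncloud/remote.php/webdav/Documents/notes.txt
```

The sync clients do considerably more than this (working out what changed, handling conflicts), so treat it as an illustration of the transport rather than of how the clients work.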

So, on the face of it, I can run my own cloud storage service on a machine I rent: this server in Germany.

I’ll admit this server doesn’t have the disk space to hold all my photographs, but I could easily move to an upgraded machine, migrate all my stuff across, and then it would do for another couple or three years at least, I reckon.

So, for the moment, I have installed the server software, plus clients on my Linux laptop, my MacBook and even my iPhone.

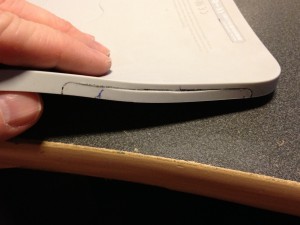

I have therefore started syncing this laptop, and that is where I have hit the single biggest failing of ANY cloud storage service.

Speed.

No, not the speed of the server at the remote end, or even the speed of the local machine running the client.

But the speed of my link to the Internet.

Wherever I am away from a corporate environment (at “home”, say), I have ADSL, and as the name says, it’s “asymmetric”: the link is faster in one direction than the other. In the case of ADSL, downloading from the Internet is the faster direction, by quite some way.

For example, the link I am on at the moment is 4.4Mbps down and 448kbps up.

So, if I want to download an ISO image for a new OS I am looking at, or even the new Adobe Photoshop Elements, I can download the files at 4.4Mbps ÷ 8 bits per byte = 550kB/s.

That means a 1MB file will download in a shade under 2 seconds, but a 4GB DVD ISO will take just over 2 hours.
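The arithmetic generalises easily. A minimal sketch, using decimal units (1MB = 10^6 bytes, the way line rates and storage plans are usually quoted) and ignoring protocol overhead and contention, so real-world transfers will take somewhat longer:

```python
def transfer_seconds(size_bytes, link_bits_per_second):
    """Idealised transfer time: size in bytes over a link rate in bits/s.

    Ignores protocol overhead and contention, so real transfers are slower.
    """
    return size_bytes * 8 / link_bits_per_second


ADSL_DOWN = 4.4e6  # 4.4Mbps, the downstream rate quoted above

print(round(transfer_seconds(1e6, ADSL_DOWN), 2))         # 1.82 (seconds, 1MB file)
print(round(transfer_seconds(4e9, ADSL_DOWN) / 3600, 2))  # 2.02 (hours, 4GB ISO)
```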

That’s not unreasonable in general; I don’t often need huge files.

But, let me translate that to my problem.

My 5.8GB Documents directory would take around 3 hours to download.

And that would be the end of the matter if those files were already on the server, which at the moment they aren’t.

I need to upload them……

… at 448kbps, which is a pathetic 56kB/s. That will take, what, ~29 hours…

A DAY!!!!!

There may be some companies that wouldn’t mind me plugging my personal laptop into the network at lunchtime, say, or out of hours, and throwing my ~6GB at a cloud storage service, without having a complete hissy fit from either a security or a bandwidth perspective.

However, these companies are likely to be the exception rather than the rule, so, personally, I’m stuck, whichever cloud storage option I choose.

I sure ain’t going to start talking about the whole Bring Your Own Device argument either, not here, not yet – it’s not the place, or crucially, the time.

The cloud works wonderfully within a corporate or educational/research environment for, say, sharing documents with colleagues in London, Leeds or even Los Angeles, as businesses and educational/research establishments will have fast, symmetric connections to the Internet and, in some instances (like where I work), actually be part of the Internet themselves.

However, back to poor old me, and my requirement to use the cloud.

It will take me ~29 hours to “seed” my cloud instance from this one machine, and this little laptop is one I don’t tend to keep “much” data on. That figure only covers my Documents: not my Music, which is ~12GB, and of course not my 450GB of photos.
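To put the whole backlog in perspective, here is a small sketch that totals the sizes mentioned above and converts them into days of continuous uploading at the 448kbps ADSL upstream rate. It assumes decimal gigabytes, a link that stays saturated around the clock and no protocol overhead, so it is a best case:

```python
UPLOAD_BPS = 448_000  # 448kbps ADSL upstream, as quoted above

# Approximate sizes from the text, in decimal gigabytes.
library_gb = {"Documents": 5.8, "Music": 12, "Photos": 450}


def upload_days(size_gb, bits_per_second=UPLOAD_BPS):
    """Idealised upload time in days, ignoring protocol overhead."""
    return size_gb * 1e9 * 8 / bits_per_second / 86_400


for name, size in library_gb.items():
    print(f"{name:<10} {upload_days(size):6.1f} days")
print(f"{'Total':<10} {upload_days(sum(library_gb.values())):6.1f} days")
```

That comes out at roughly 29 hours for Documents alone and about 97 days, over three months, for everything.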

And therein lies the problem with the cloud: it isn’t raining in the cloud, it’s raining under it, on me and on you, at home, wanting to upload our data.

It’s great when the data is already there, as with Google Apps, but getting data such as my documents and photographs up into the cloud is a giant pain in the backside, because the link it has to go over is so slow in the upload direction.

Now, to be clear, I am not blaming the cloud for this; the cloud itself isn’t the problem here, not by a long shot. The blame lies with broadband and the nature of the beast, a beast that is now severely flawed.

ADSL started life when all that one really needed to do was to download data from the Internet, and since about 1993 that is all most people have ever done.

However, the cloud is changing the game, for the better in a lot of ways. The trouble is that the networks historically built to serve consumers are now woefully inadequate for the demand for data flow, and are essentially not fit for the new purpose of this “new” Internet and the new world order of “cloud”. Certainly not for cloud storage, anyway; cloud applications are a different matter, as they are all about sending data from the Internet to the clients.

One thing to look forward to is fibre connectivity.

If you can get it.

I couldn’t, at least not until June this year, as BT appears to have screwed up the rollout of FTTC (Fibre To The Cabinet) in my area: they missed out the cabinet serving my estate, the newest estate in the town I live in.

There are people in outlying areas who have this capability, but not me, or anyone on my estate, and it’s taken BT a very long time to undo their screwup of missing out this one cabinet.

30 June 2013 is the date from which I might be able to get fibre-based broadband at home, and yes, this will massively improve my ability to upload my files to the cloud storage provider of my choice.

Even then, though, it will only be ~15Mbps, or about 1.9MB/s. Admittedly, that still means uploading my photographs will take a long time, a shade under 3 days, but my documents will take under an hour, a distinct improvement.
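A quick side-by-side of the current ADSL upstream and the ~15Mbps expected from FTTC, as a sketch under the same simplifying assumptions as before (decimal gigabytes, a fully used link, no protocol overhead):

```python
ADSL_UP_BPS = 448_000   # current ADSL upstream, 448kbps
FTTC_BPS = 15_000_000   # the ~15Mbps expected from FTTC, per the text


def hours_to_upload(size_gb, bits_per_second):
    """Idealised upload time in hours (decimal GB, no protocol overhead)."""
    return size_gb * 1e9 * 8 / bits_per_second / 3600


for name, size_gb in [("Documents", 5.8), ("Photos", 450)]:
    adsl = hours_to_upload(size_gb, ADSL_UP_BPS)
    fttc = hours_to_upload(size_gb, FTTC_BPS)
    print(f"{name}: ADSL {adsl:.1f} h, FTTC {fttc:.1f} h ({adsl / fttc:.0f}x faster)")
```

That gives about 67 hours for the photographs (the shade under 3 days) and under an hour for the documents, roughly 33 times faster than the current upstream.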

Like a real cloud, the Internet cloud is great when you are in it, as I am at work. But for me, at home at least for the moment, while it isn’t raining in the cloud, I am definitely under a cloud, and I feel a little cold and damp as a result.